Spacy Language

Visualize Space, with Language

Architecture, Language and Word Vectors

Architecture professors use... weird words, from abstract terms like 'deconstruction' and 'spatiality' to out-of-place adjectives like 'rectilinear' and 'robust'. These 'starchitect' words are usually composite and complex, yet ill-defined. As a result, they raise the barrier to entry for anyone outside the profession. In practice, such language rarely spreads beyond the circle of architects; often not even engineers and clients are included in these narratives. In my experience, this language was a serious roadblock in my academic pursuits: because of the language barrier, I frequently walked out of a critique without any valuable takeaways.

I kind of understand how the situation came to be. Spatial experiences can be so elusive and complex that words often fail to capture them. But we still have to talk about them, give critiques, and carry on designing.

Therefore, in my thesis project, I set out to explore the connection between language and spatial experience. Since quantitative research is rarely done in architecture due to physical constraints, my initial thought was to use virtual reality to try to 'measure' some words. As I looked into the problem and its context, I gathered three insights from previous research:

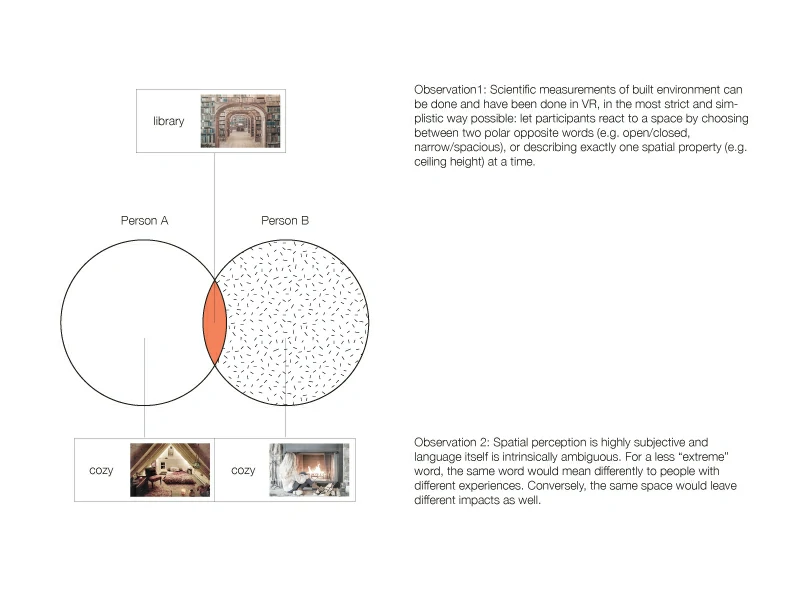

Scientific measurements of the built environment can be, and have been, done in VR, but only in the strictest and most simplistic way possible: participants react to spaces by choosing between two polar opposite words (e.g. open/closed, narrow/spacious), or by describing exactly one spatial property (e.g. ceiling height) at a time.

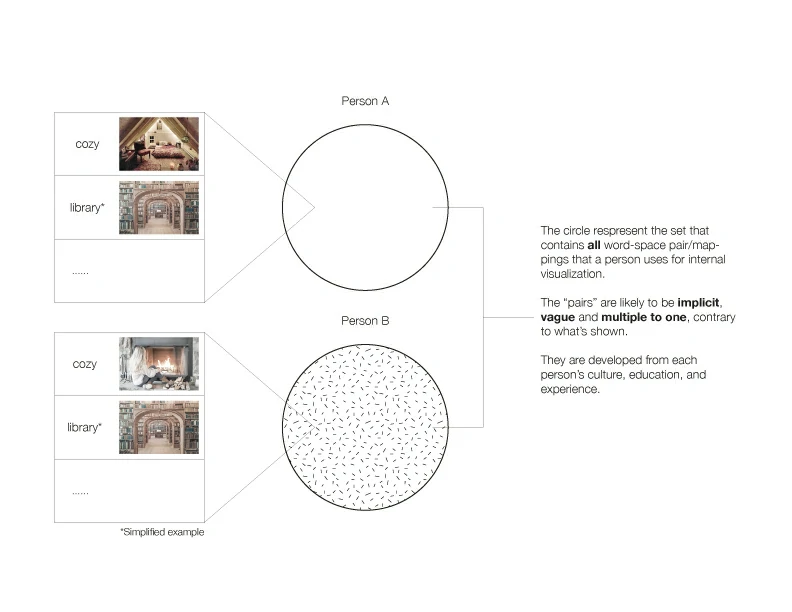

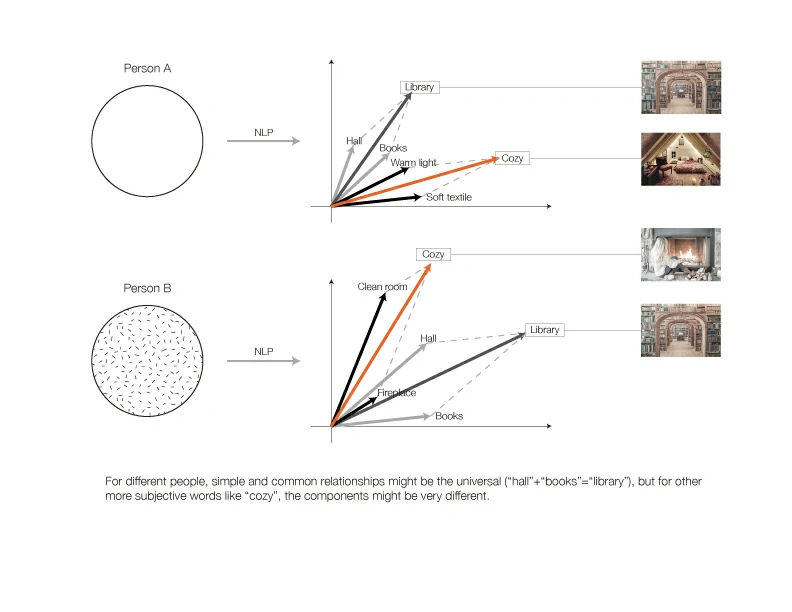

Spatial perception is highly subjective, and language itself is intrinsically ambiguous. Away from such polar extremes, the same word means different things to people with different experiences. Conversely, the same space leaves different impressions on different people.

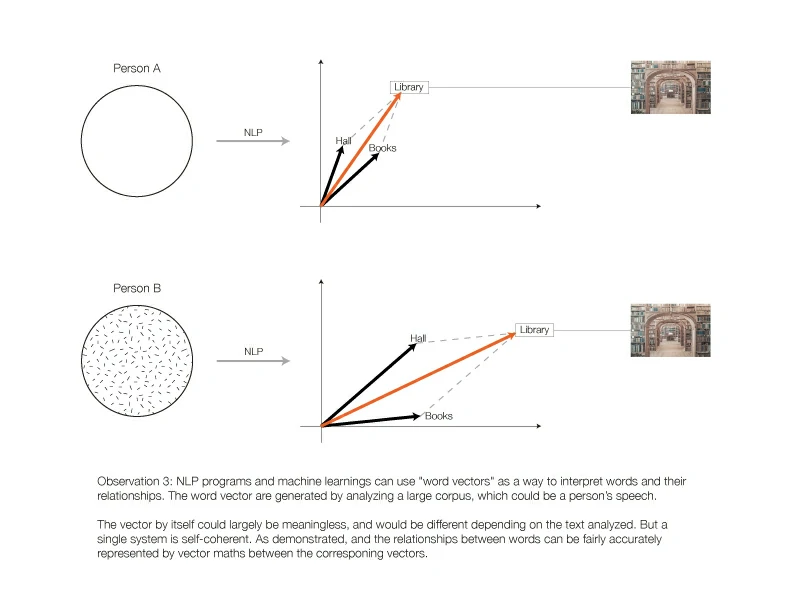

NLP programs and machine learning models can use 'word vectors' (word embeddings) to interpret words and predict the next word in a sentence. The vectors are learned by an algorithm that analyzes a corpus of text. A single word vector by itself is largely meaningless, and it differs from system to system. But each system is self-coherent, so the relationships between words can be represented fairly accurately by vector math on the corresponding vectors; the classic example is king - man + woman ≈ queen.
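To make the vector math concrete, here is a minimal Python sketch using invented three-dimensional 'toy' vectors. The numbers and the helper names `cosine` and `analogy` are illustrative assumptions, not values from any trained model. It compares words by cosine similarity and answers the king - man + woman analogy with a nearest-neighbour search. With a library such as spaCy and a model that ships vectors, the real vectors would come from `token.vector` instead, and `Token.similarity` performs a cosine comparison.

```python
import math

# Toy 3-dimensional vectors invented for illustration only; real embeddings
# are learned from a corpus and typically have hundreds of dimensions.
TOY_VECTORS = {
    "king":     [0.9, 0.8, 0.1],
    "queen":    [0.9, 0.1, 0.8],
    "man":      [0.1, 0.9, 0.1],
    "woman":    [0.1, 0.1, 0.9],
    "prince":   [0.8, 0.9, 0.2],
    "princess": [0.8, 0.2, 0.9],
}


def cosine(a, b):
    """Cosine similarity: 1.0 for the same direction, 0.0 for orthogonal vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c, vectors):
    """Return the word whose vector is closest to vector(a) - vector(b) + vector(c).

    The three query words are excluded, as is usual in analogy tests.
    """
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))


print(analogy("king", "man", "woman", TOY_VECTORS))  # queen
```

Because only directions matter under cosine similarity, the absolute numbers in any one system are arbitrary, which matches the point above: a vector means something only relative to the other vectors in the same system.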

The first insight means that a completely objective and quantitative experiment would be very inefficient in a design environment.

The second insight calls for emphasis on the uniqueness of each individual's language and how it is linked to their spatial perception. Though I would love a program that decodes the 'hard' words used by my professors, such a program probably can't exist, or wouldn't be very useful.

The third insight, however, suggests that each person's language patterns could be self-coherent and decodable, and it even provides a means of encoding and decoding them.

Proposal for a Design Tool

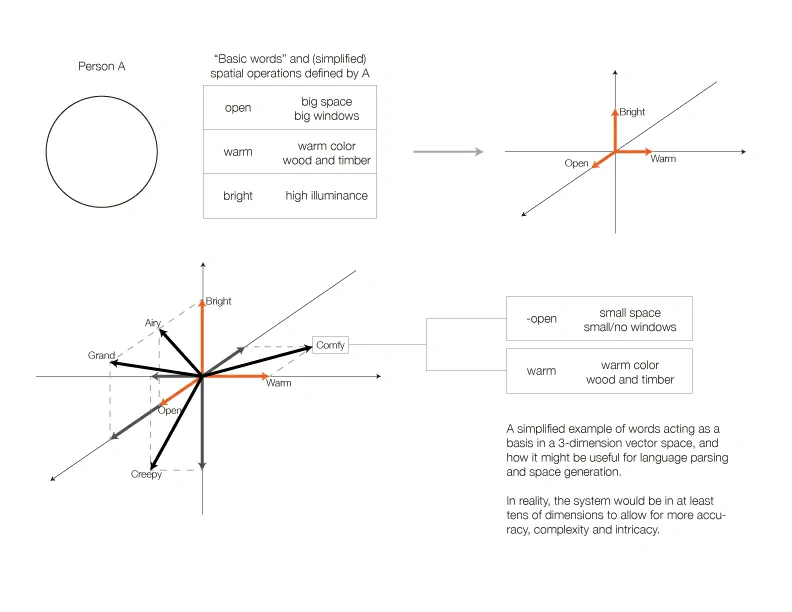

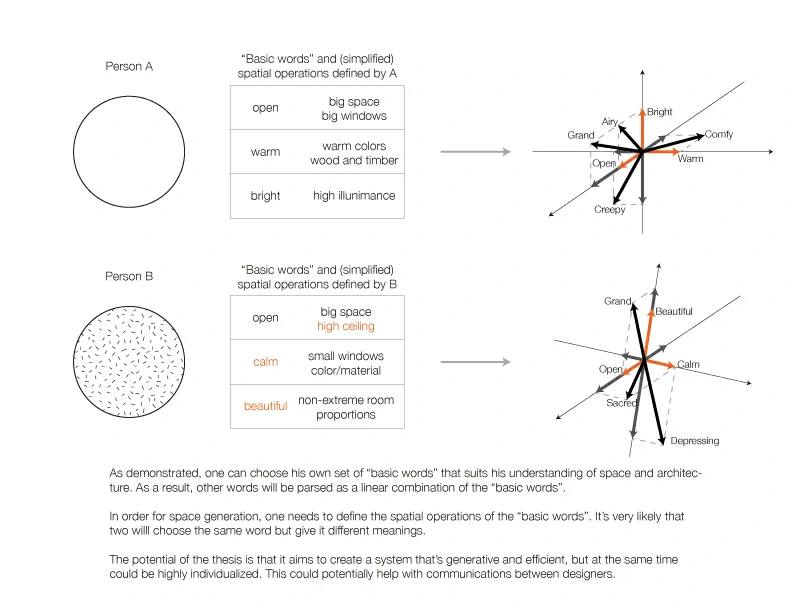

From linear algebra, we know that any vector space of dimension N has a basis of N linearly independent vectors. This means that any vector in the space can be written as a unique linear combination of the basis vectors. From this, I propose:

Can I use a set of words as a basis for the vector space of word vectors?

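Then all language could be mapped onto a small set of 'basic words' (small compared with the vocabulary, although a full basis still needs as many words as the vectors have dimensions). If each 'basic word' in the basis corresponds to a kind of 'basic space', we could potentially break complex language down into a simpler combination of 'basic spaces'.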
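The proposal can be sketched as a small linear-algebra routine. Assuming each word is a vector, the coefficients that express a target word in terms of the 'basic words' are the least-squares solution of the normal equations (B^T B) c = B^T v, where the columns of B are the basic-word vectors. With as many independent basic words as dimensions the fit is exact; with fewer, the result is the closest combination they can form (the orthogonal projection onto their span). The basic words, their toy vectors and the helper names below are hypothetical; in practice `numpy.linalg.lstsq` would do the same job.

```python
# Hypothetical 'basic words' with toy 3-dimensional vectors (illustration only).
BASIS = {
    "open":   [1.0, 0.2, 0.0],
    "tall":   [0.0, 1.0, 0.3],
    "bright": [0.2, 0.0, 1.0],
}


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def solve(matrix, rhs):
    """Solve a small square linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("basic words are linearly dependent")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(aug[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def decompose(target, basis):
    """Least-squares weights c so that sum(c[w] * basis[w]) approximates target."""
    words = list(basis)
    vecs = [basis[w] for w in words]
    gram = [[dot(a, b) for b in vecs] for a in vecs]  # B^T B
    rhs = [dot(a, target) for a in vecs]              # B^T v
    return dict(zip(words, solve(gram, rhs)))


def reconstruct(weights, basis):
    """Rebuild the combined vector from the per-word weights."""
    dim = len(next(iter(basis.values())))
    out = [0.0] * dim
    for word, c in weights.items():
        for k in range(dim):
            out[k] += c * basis[word][k]
    return out


# A made-up target word built as 0.5*open + 0.3*tall + 0.8*bright.
weights = decompose([0.66, 0.4, 0.89], BASIS)
print({w: round(c, 3) for w, c in weights.items()})
```

Each weight could then drive one 'basic space' operation. Note that the weights can come out negative or larger than one, which is exactly where the interpretation of 'scaling' a word becomes questionable.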

Obviously, this mapping is a huge simplification. As discussed, the nature of language is complex and ambiguous. A sentence is also often more than the sum of its words. Words with multiple meanings are conflated into a single vector. What's more, the word vectors form a discrete set of points rather than a continuous space: a scaled or combined vector rarely lands exactly on an actual word, which makes operations like scalar multiplication rather questionable.

In summary, the proposed vector mapping is NOT meant to be taken as 'magic' that flattens the complexity of human language, nor as a tool that can fully decode complex speech. However, since this project is a design thesis rather than scientific research, I'm curious about the potential of such mappings in a design context, and about what this new system could bring to the table. I believe the proposal has the following merits:

Word vectors are still fairly accurate and self-consistent. With a well-tested algorithm that allows for synonyms and ambiguity in the mapping, the results could still be fairly understandable.

The inaccuracies of the mapping could themselves be productive; it's interesting to see what they could help create.

Every person will have a different, individualized vector space. This design tool could potentially help people visualize their different understandings and thus improve communication between designers.

Affordance of VR and Mesh Geometries

With the methodology established, I needed to build a proof-of-concept prototype to serve as an example of this new design tool. From the start of the thesis, I had set my sights on virtual reality, as I thought it would be an amazing opportunity to explore the technology. What's more, it would be a great medium for a gallery exhibition.

At the start, I was hoping to generate typical architectural spaces. But I soon realized that such spaces don't fully use the potential of VR. In a virtual space, physics doesn't need to apply, and space itself can be far more dynamic and interactive. A prototype built in VR should embrace those possibilities and tackle the challenges they bring.

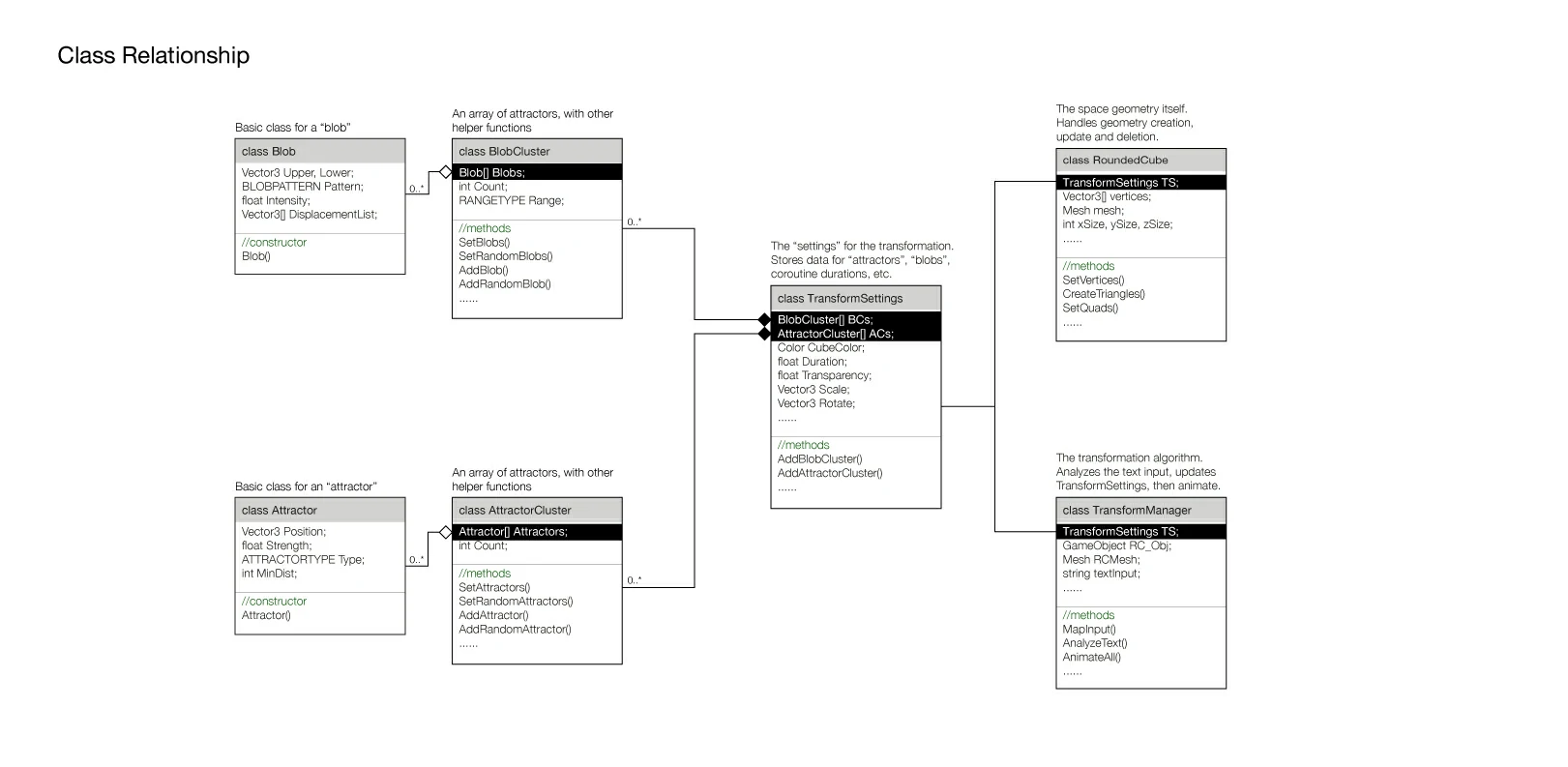

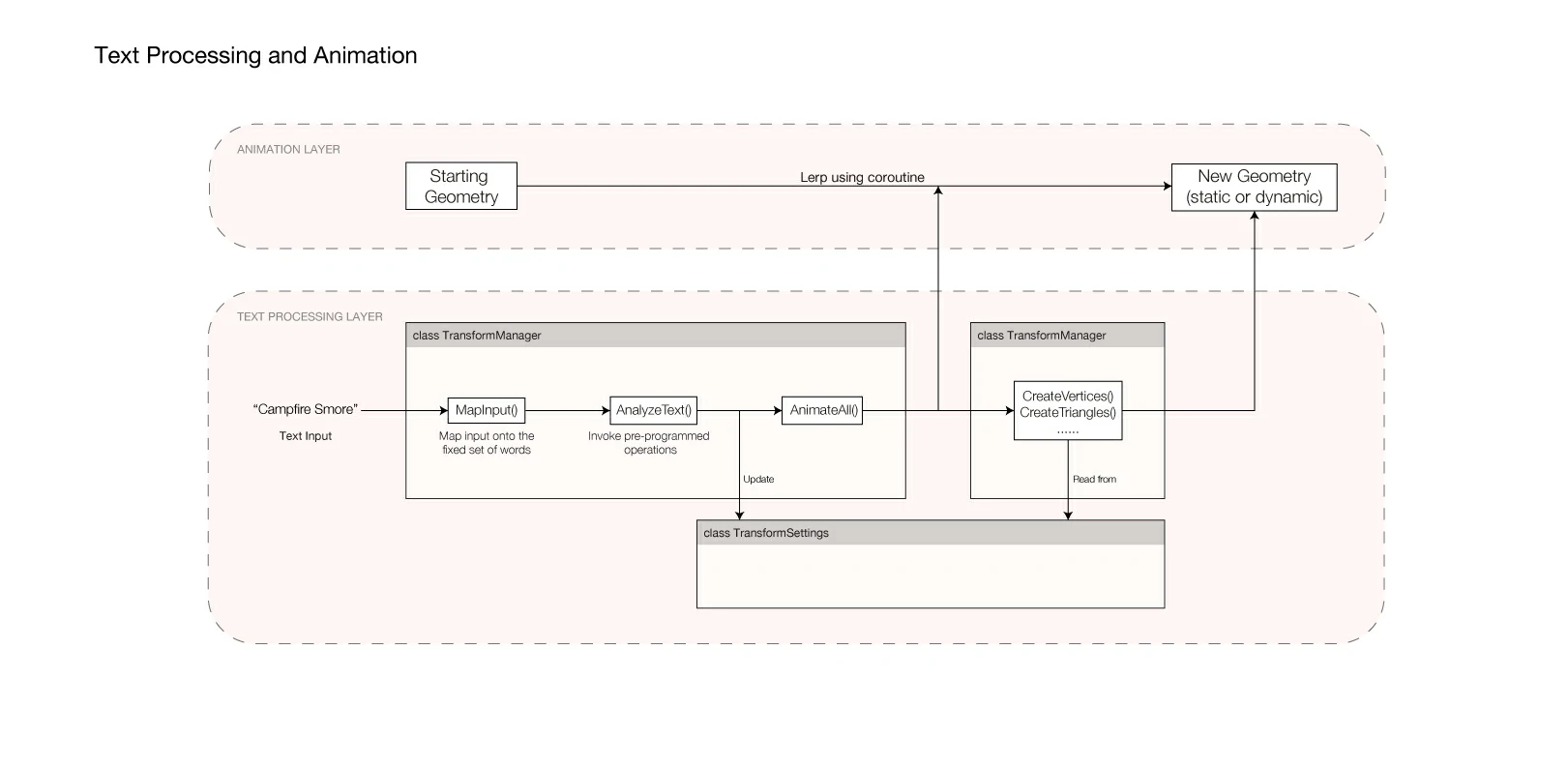

Most architects build their digital models with NURBS geometry. Such geometry is not native to most VR interfaces and would thus require (usually manual) conversion to meshes. Therefore, for the prototype, I decided to see what I could do with mesh geometry instead. After some research into Delaunay triangulation, marching cubes and other mesh generation algorithms, I decided to use a procedurally generated rounded cube to represent my 'space', as it gave me more control in an architectural sense. I designed three basic operations to configure the space:

'Sizing': This is the most basic operation. It changes the dimensions of the cube, calculating the room's plan proportions as well as its ceiling height.
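Here is a minimal Python sketch of how a rounded cube could be generated and resized. Everything in it is an assumption for illustration: the inputs `floor_area`, `proportion` (width divided by depth) and `ceiling_height`, the helper names `plan_dimensions` and `rounded_box`, and the rounding technique itself (sample the box surface on a grid, clamp each point to an inner box shrunk by the corner radius, then push it back out by that radius). It is not the prototype's actual code.

```python
import math


def plan_dimensions(floor_area, proportion, ceiling_height):
    """Hypothetical sizing inputs -> box size (width, depth, height).

    proportion = width / depth, so width * depth == floor_area.
    """
    depth = math.sqrt(floor_area / proportion)
    return (proportion * depth, depth, ceiling_height)


def _round_point(p, size, radius):
    """Move a point on the box surface onto the rounded box surface."""
    inner = [min(max(p[k], radius), size[k] - radius) for k in range(3)]
    d = [p[k] - inner[k] for k in range(3)]
    length = math.sqrt(sum(c * c for c in d))
    if length == 0.0:  # only happens when radius == 0 (a sharp box)
        return tuple(p)
    return tuple(inner[k] + d[k] / length * radius for k in range(3))


def rounded_box(size, radius, res=8):
    """Return (vertices, triangles) for a box from (0, 0, 0) to size with rounded edges.

    Vertices along the seams between faces are duplicated to keep the sketch
    short; a production mesh would weld them.
    """
    if radius < 0 or radius > min(size) / 2:
        raise ValueError("radius must be between 0 and half the smallest dimension")
    vertices, triangles = [], []
    for a in range(3):                   # axis the face is perpendicular to
        u, v = (a + 1) % 3, (a + 2) % 3  # in-plane axes; cyclic order gives u x v = +a
        for side, outward_positive in ((0.0, False), (size[a], True)):
            base = len(vertices)
            for i in range(res + 1):
                for j in range(res + 1):
                    p = [0.0, 0.0, 0.0]
                    p[a] = side
                    p[u] = size[u] * i / res
                    p[v] = size[v] * j / res
                    vertices.append(_round_point(p, size, radius))
            for i in range(res):
                for j in range(res):
                    k = base + i * (res + 1) + j
                    k_u, k_v, k_uv = k + res + 1, k + 1, k + res + 2
                    # Wind each triangle so its normal points out of the box.
                    if outward_positive:
                        triangles += [(k, k_u, k_v), (k_v, k_u, k_uv)]
                    else:
                        triangles += [(k, k_v, k_u), (k_v, k_uv, k_u)]
    return vertices, triangles


# 'Sizing' a hypothetical 12 m^2 room, three times as wide as it is deep.
size = plan_dimensions(floor_area=12.0, proportion=3.0, ceiling_height=2.7)
verts, tris = rounded_box(size, radius=0.5, res=4)
print(size, len(verts), len(tris))
```

In this sketch, resizing simply means regenerating the mesh with new dimensions; because each vertex is recomputed from the grid, the corner radius stays constant instead of being stretched along with the room.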

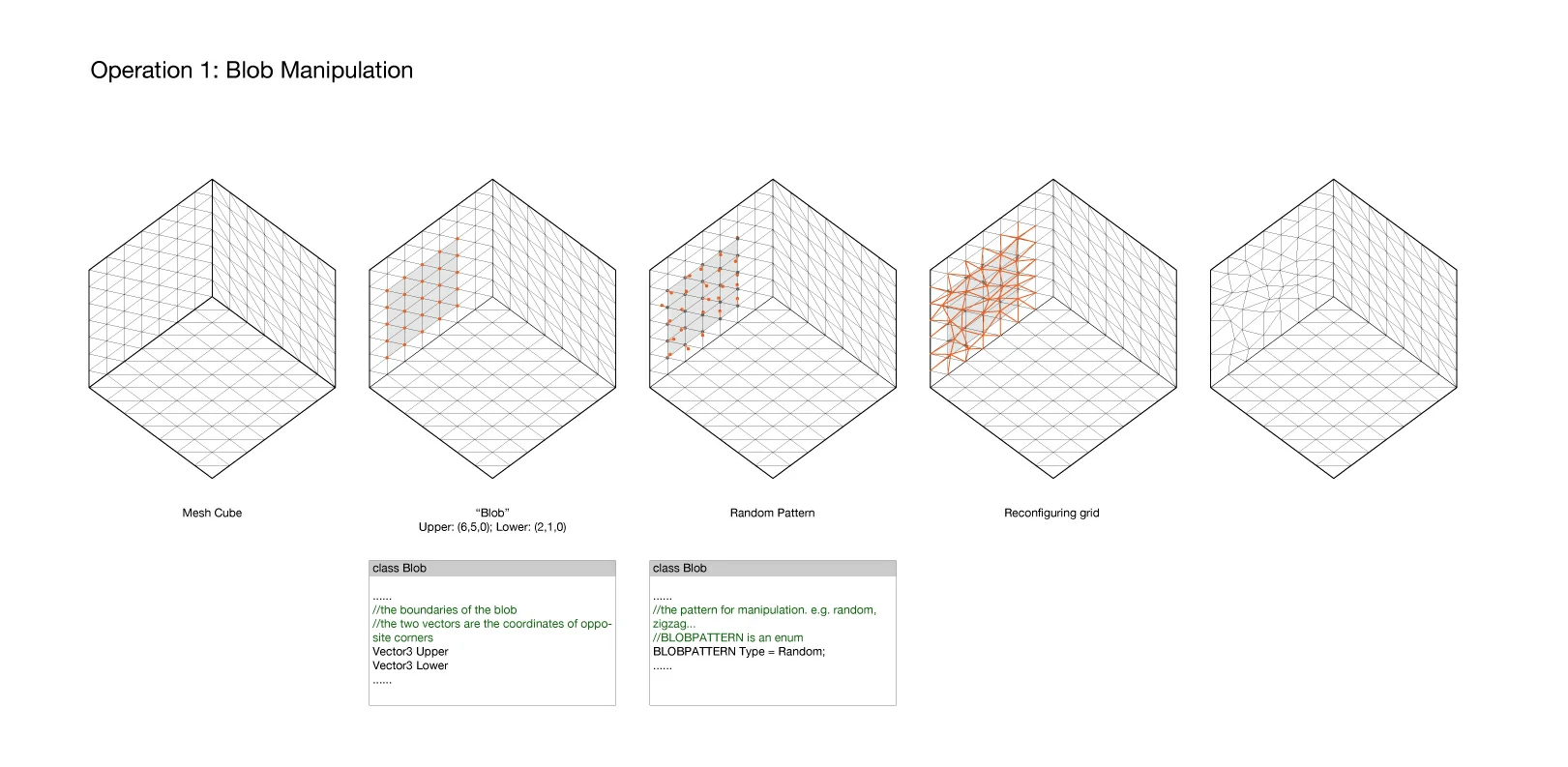

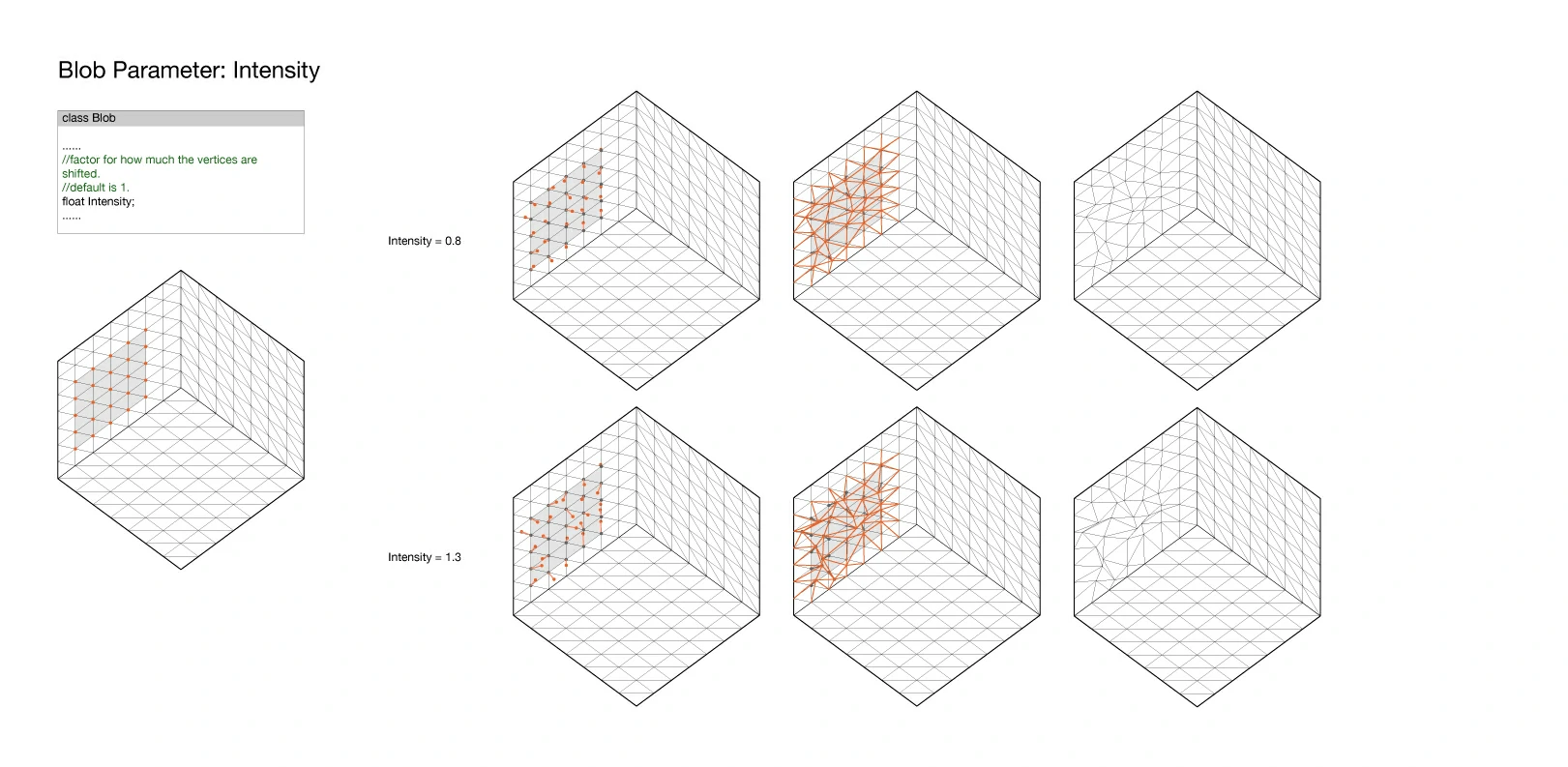

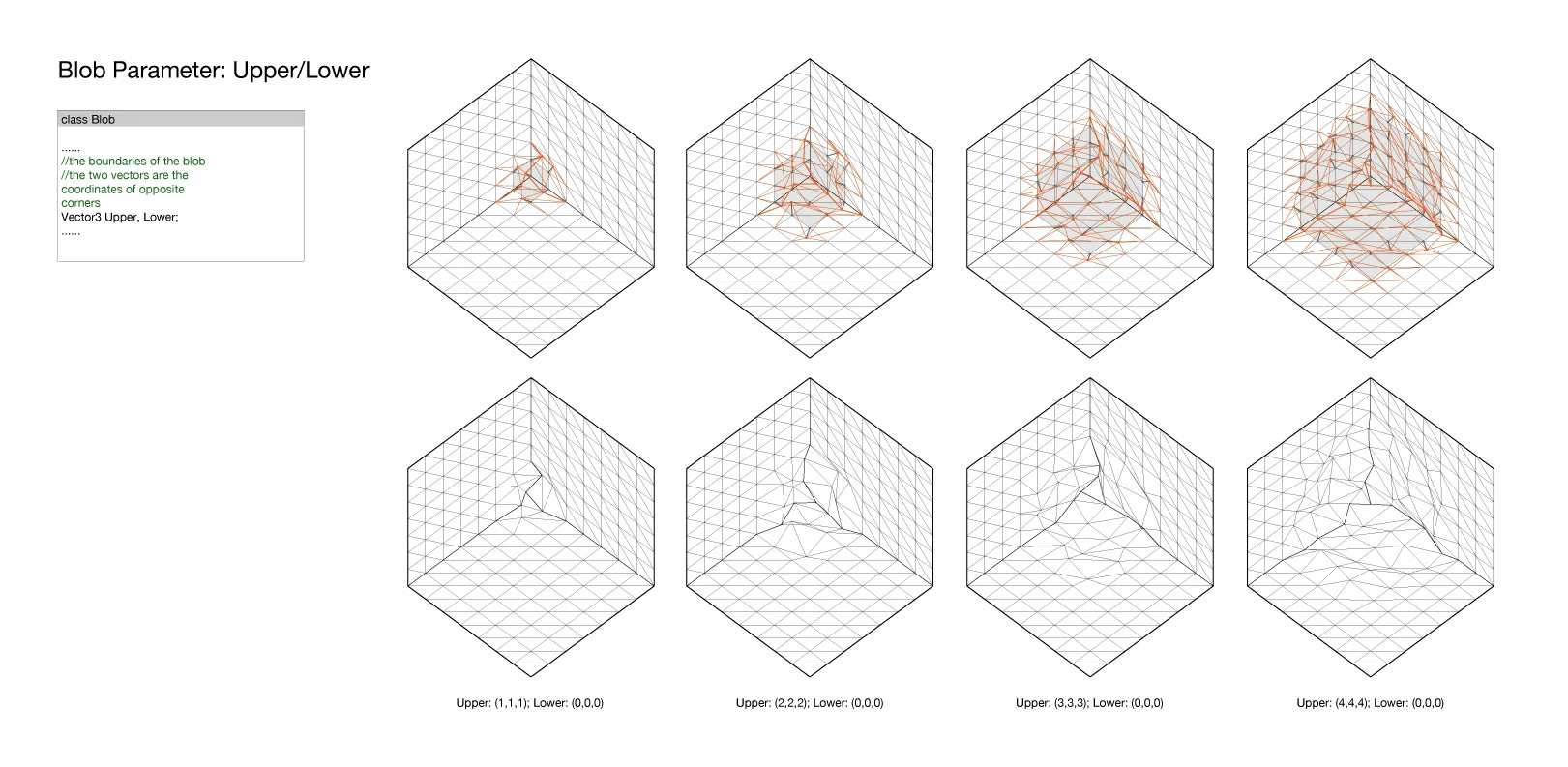

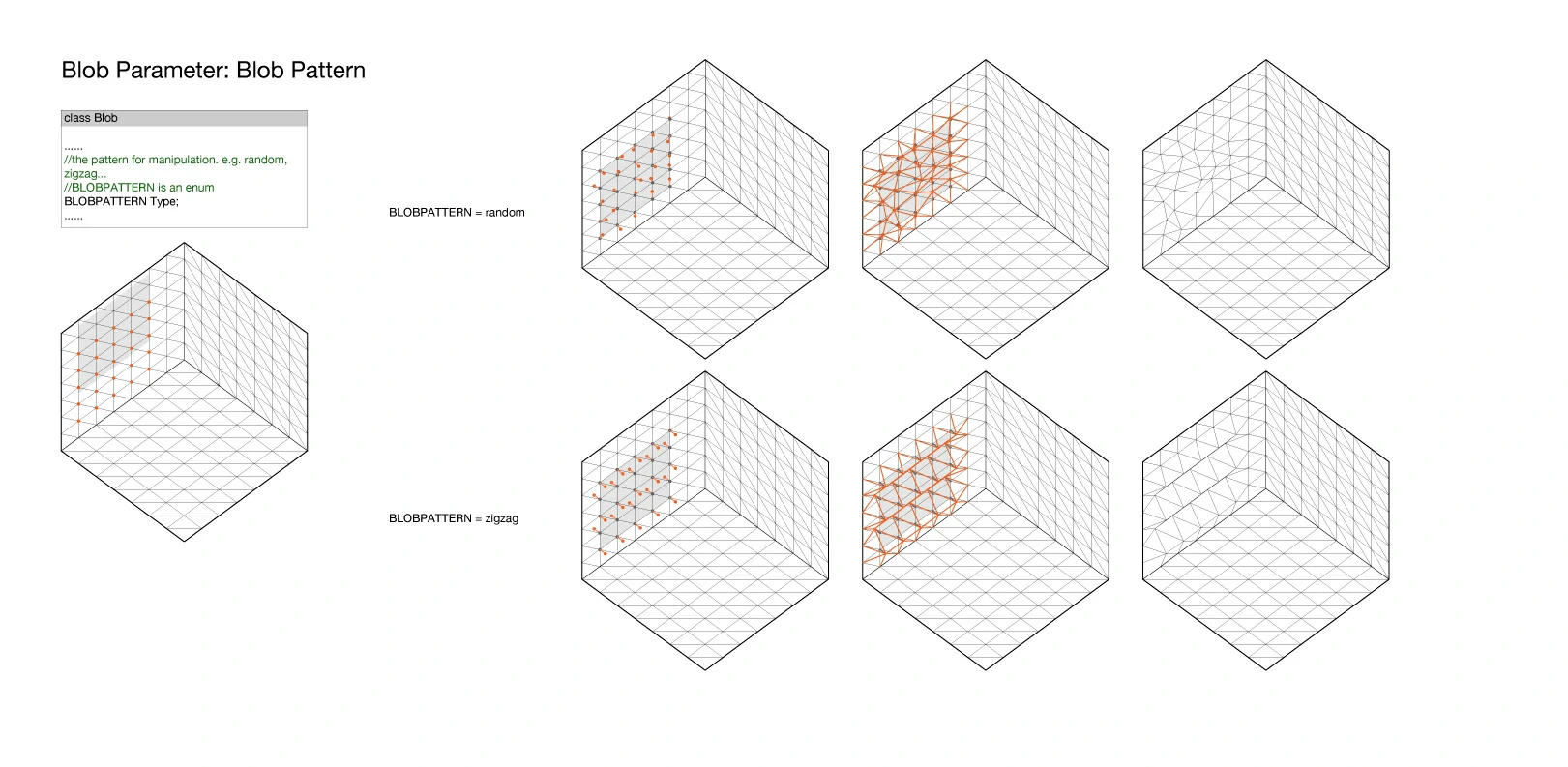

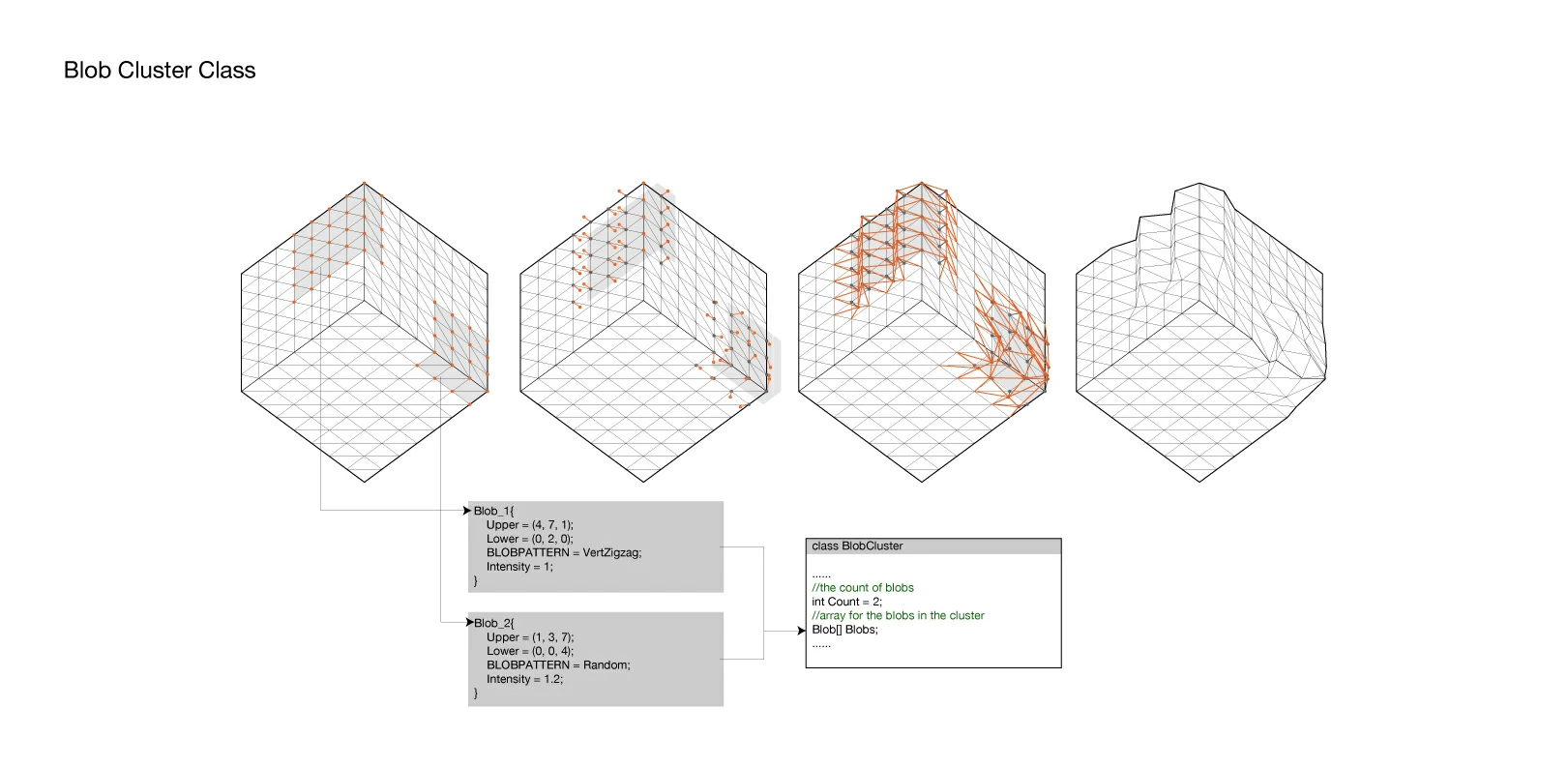

'Blob': A region on the surface of the cube, defined by two vectors. Operations can change the vertex colors, or shift the positions of all the vertices within the 'blob'.
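The text doesn't specify what the two vectors of a 'blob' are. The Python sketch below assumes, purely for illustration, that they are a center point and a vector of half-extents, so the blob is the set of vertices inside an axis-aligned ellipsoid. The `Blob` class and its method names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Blob:
    """A surface region, assumed here to be an ellipsoid given by two vectors."""
    center: Vec3  # assumption: a point on (or near) the cube surface
    radii: Vec3   # assumption: half-extents of the region along x, y and z

    def members(self, vertices: List[Vec3]) -> List[int]:
        """Indices of vertices with ((x-cx)/rx)^2 + ((y-cy)/ry)^2 + ((z-cz)/rz)^2 <= 1."""
        return [
            i for i, p in enumerate(vertices)
            if sum(((p[k] - self.center[k]) / self.radii[k]) ** 2 for k in range(3)) <= 1.0
        ]

    def recolor(self, vertices: List[Vec3], colors: List[Vec3], color: Vec3) -> None:
        """Set the color of every vertex inside the blob."""
        for i in self.members(vertices):
            colors[i] = color

    def shift(self, vertices: List[Vec3], offset: Vec3) -> None:
        """Move every vertex inside the blob by the same offset (in place)."""
        for i in self.members(vertices):
            vertices[i] = tuple(vertices[i][k] + offset[k] for k in range(3))
```

Membership is computed before any vertex moves, so a shift never changes which vertices it applies to partway through.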

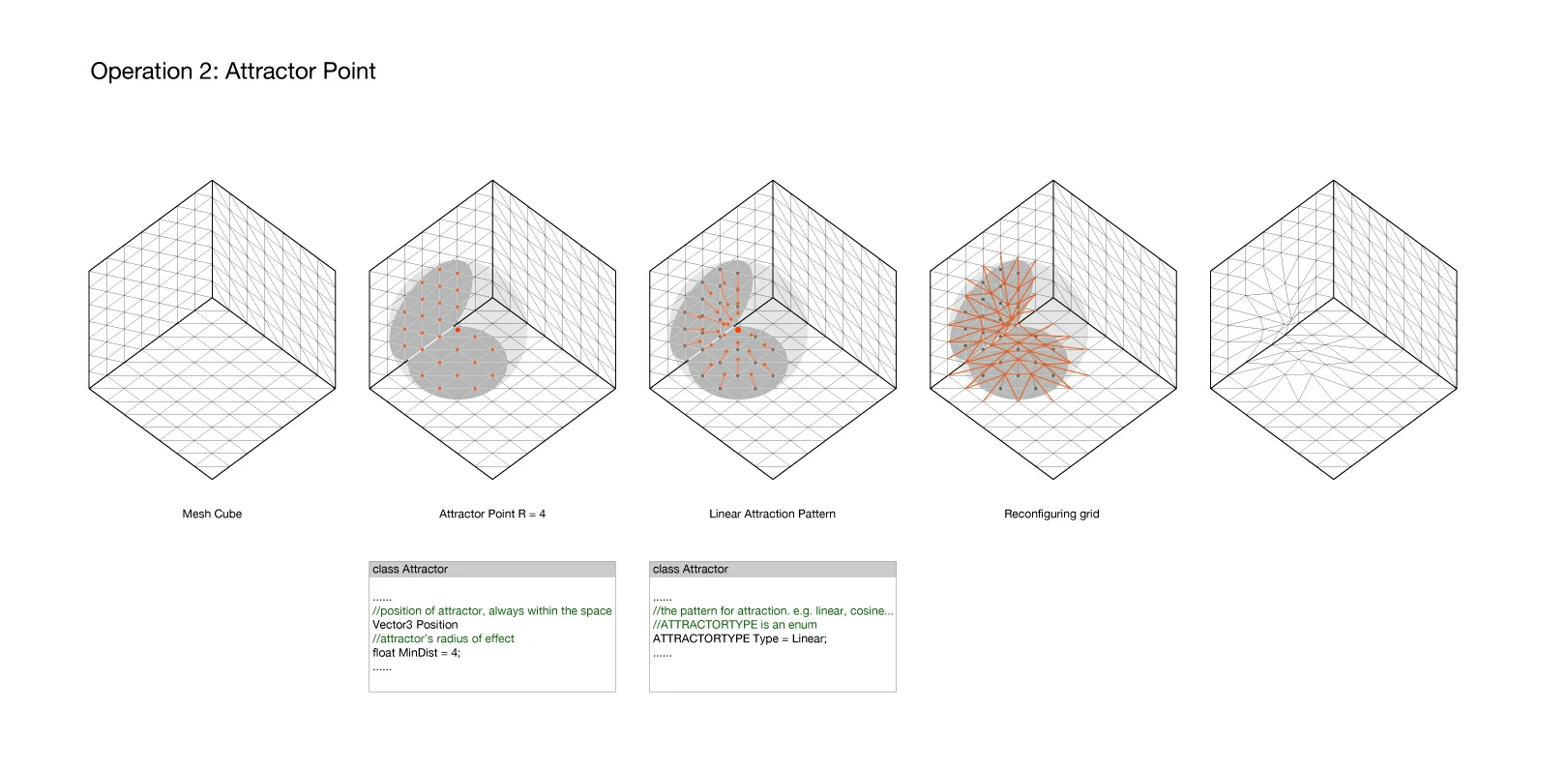

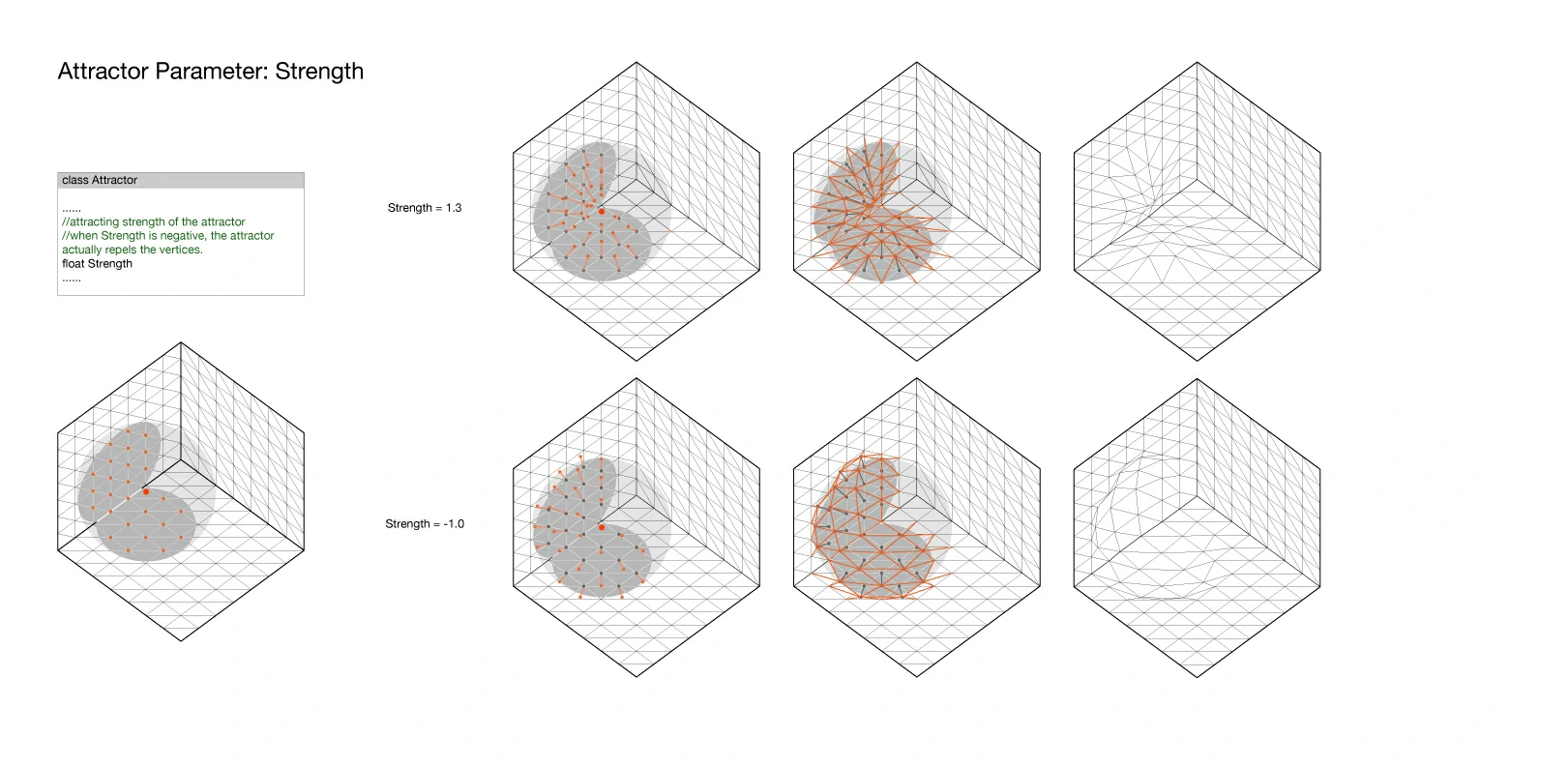

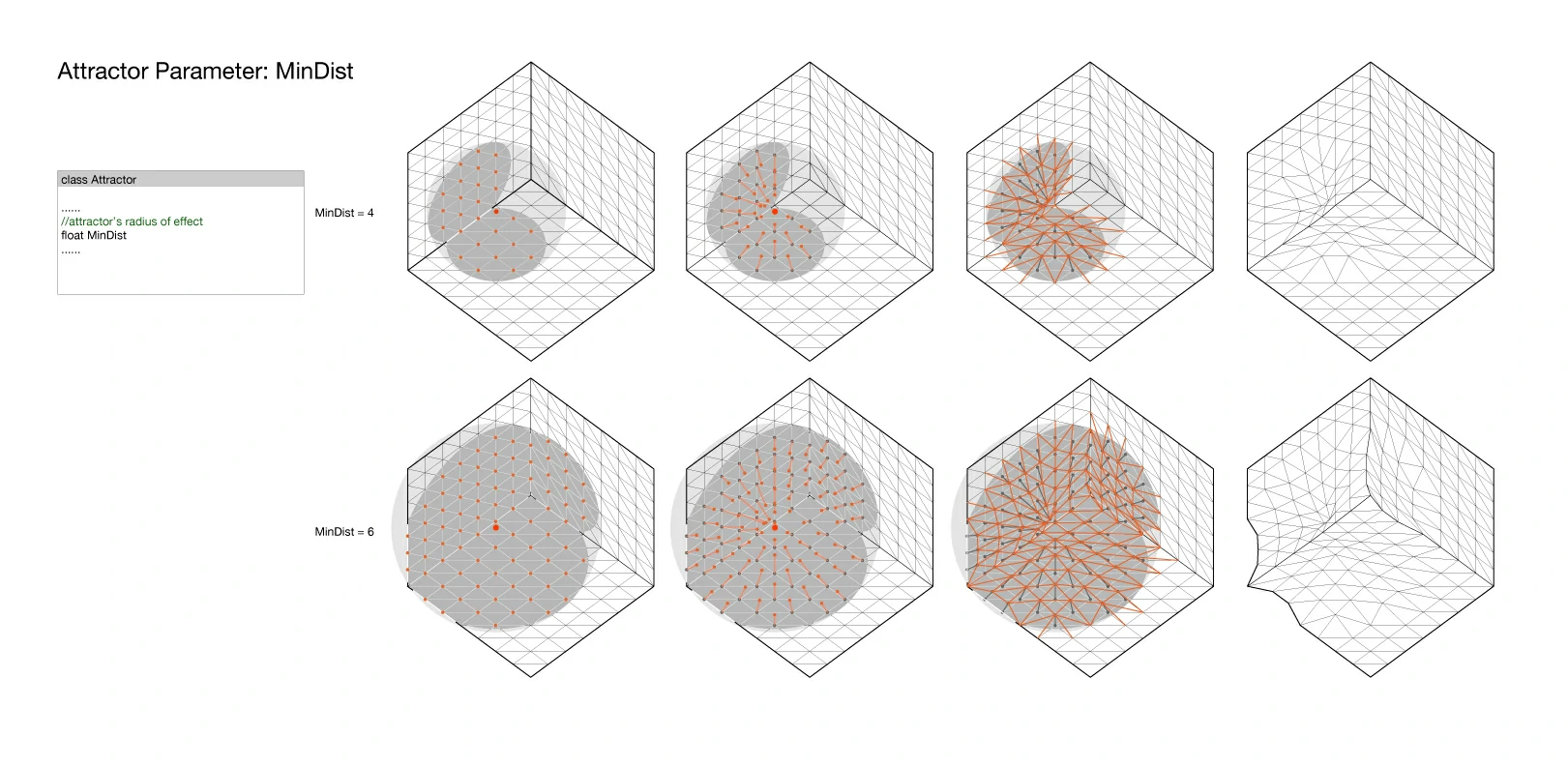

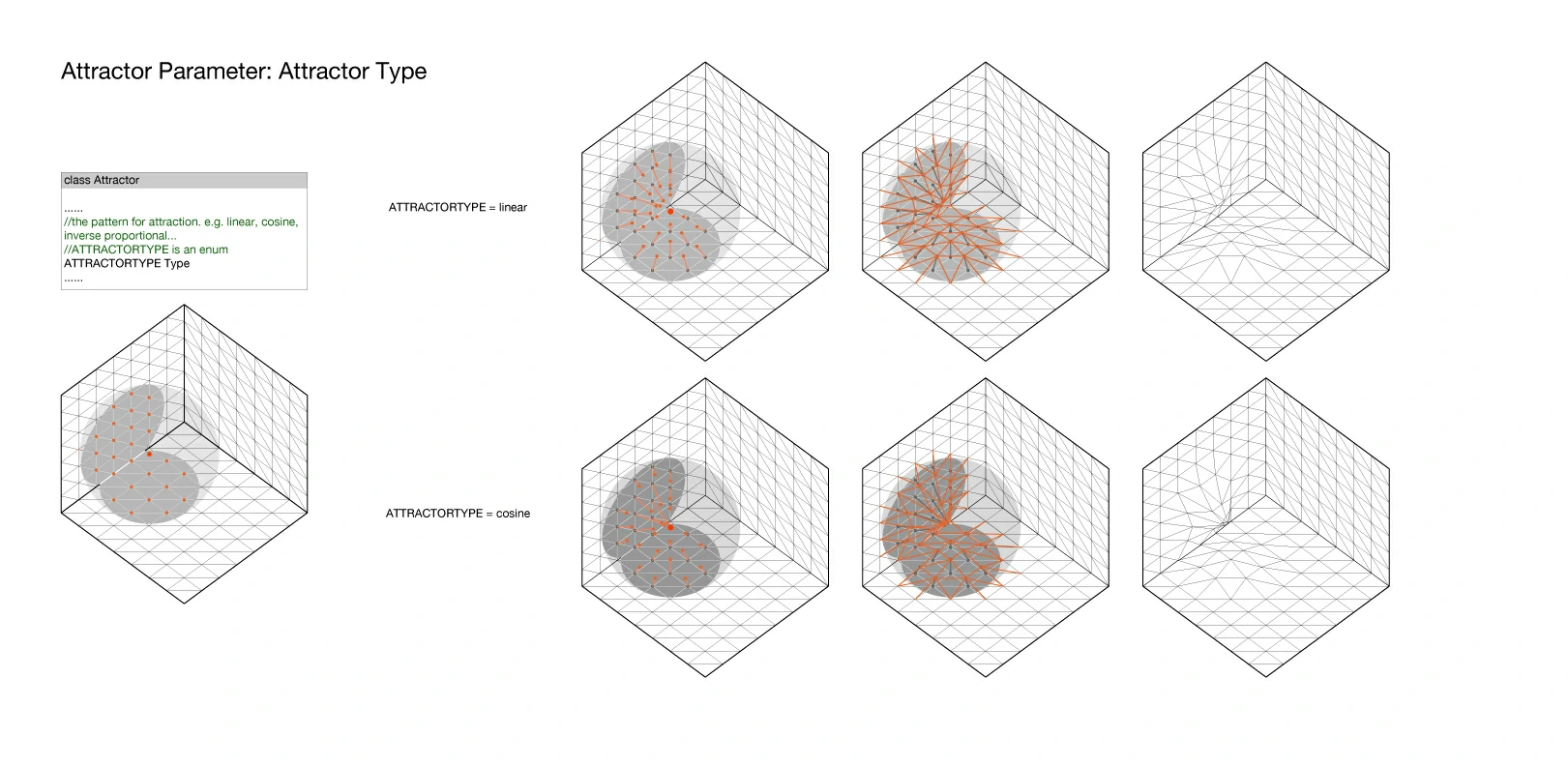

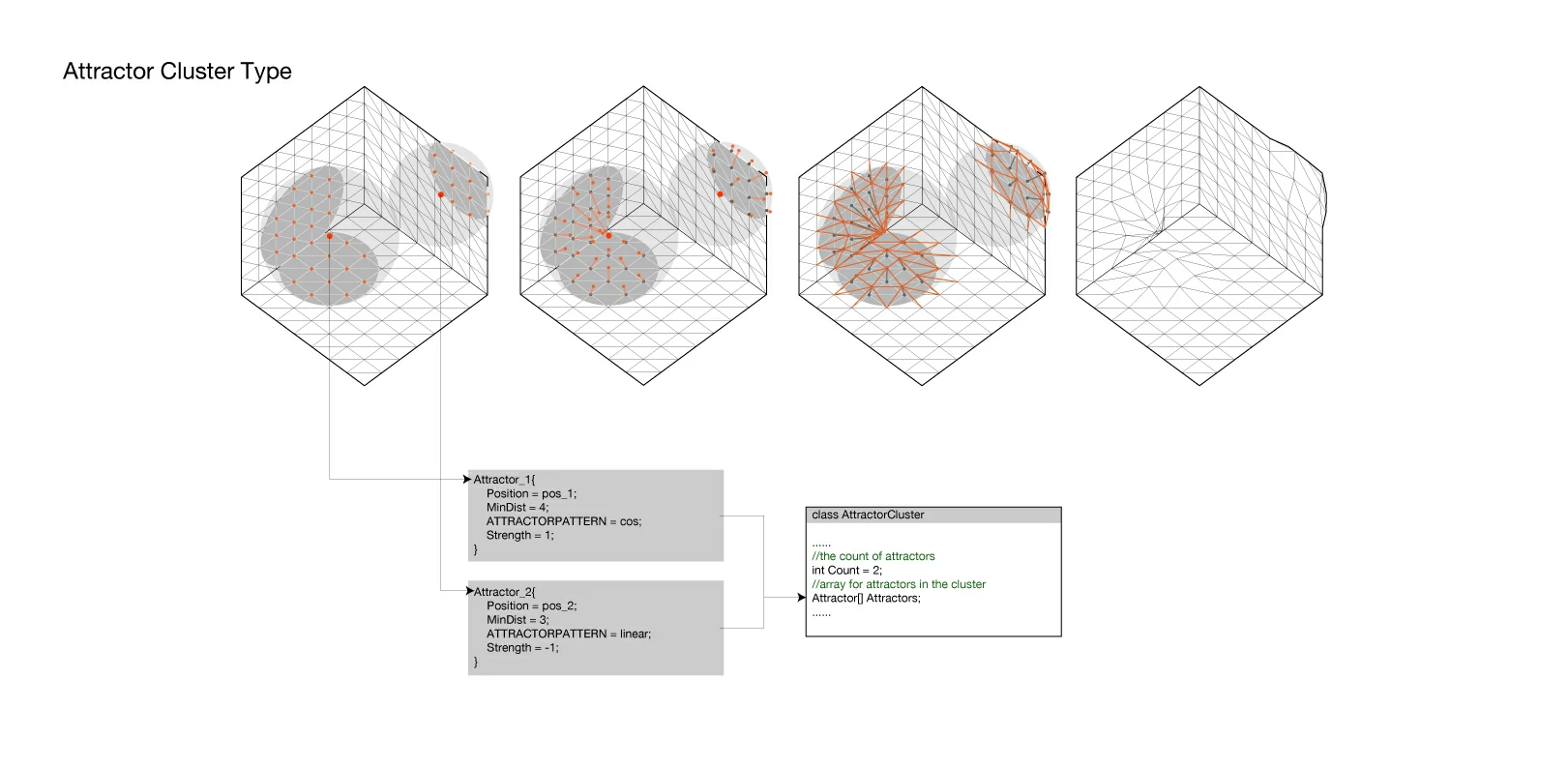

'Attractor': A point within the cube. All vertices close enough to it are affected. Operations can change the vertex colors, or shift the positions of the 'attracted' vertices.

For easier management, aggregate classes group multiple blobs or attractors together. By combining the various settings of these three operations, one can start to create some genuinely bizarre and dynamic spaces. For example, setting the user's position as the attractor point makes the space react to the user's movement through it.
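A minimal Python sketch of an attractor and an aggregate group follows, assuming a linear falloff: a vertex at distance d < radius moves strength * (1 - d / radius) of the way toward the point, and a negative strength pushes vertices away. The falloff shape, the parameter names, the class names and the `on_frame` hook are all assumptions. Deforming from a stored rest pose on every frame, rather than moving already-moved vertices again, keeps the effect from accumulating when the attractor follows the user.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Attractor:
    position: Vec3
    radius: float          # vertices at this distance or farther are unaffected
    strength: float = 0.5  # how strongly vertices are pulled in; negative pushes away

    def offset(self, p: Vec3) -> Vec3:
        """Displacement for one vertex, using a linear falloff with distance."""
        d = [self.position[k] - p[k] for k in range(3)]
        dist = math.sqrt(sum(c * c for c in d))
        if dist >= self.radius:
            return (0.0, 0.0, 0.0)
        weight = self.strength * (1.0 - dist / self.radius)
        return tuple(weight * c for c in d)


@dataclass
class AttractorGroup:
    attractors: List[Attractor] = field(default_factory=list)

    def deform(self, rest: List[Vec3]) -> List[Vec3]:
        """Sum every attractor's offset for each rest-pose vertex; rest is not modified."""
        out = []
        for p in rest:
            total = [0.0, 0.0, 0.0]
            for a in self.attractors:
                o = a.offset(p)
                for k in range(3):
                    total[k] += o[k]
            out.append(tuple(p[k] + total[k] for k in range(3)))
        return out


def on_frame(group: AttractorGroup, rest: List[Vec3], user_position: Vec3) -> List[Vec3]:
    """Hypothetical per-frame update: the first attractor follows the user."""
    group.attractors[0].position = user_position
    return group.deform(rest)
```

Summing offsets lets overlapping attractors combine, which is one simple way an aggregate class can manage many of them at once.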

I exported the generated geometries and 3D printed them for the exhibition.
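The export pipeline isn't described here, so as one plausible route, this Python sketch writes a triangle mesh (a vertex list plus triangle index triples, as a procedural generator would produce) to Wavefront OBJ, a plain-text format that many modeling and slicing tools can read. The function names are hypothetical. For printing, the mesh generally needs to be watertight, so duplicated seam vertices may need to be merged in a mesh tool first.

```python
def to_obj(vertices, triangles):
    """Serialize a triangle mesh as Wavefront OBJ text.

    'v' lines hold vertex positions; 'f' lines hold faces, whose indices are
    1-based in OBJ, so each 0-based index is shifted by one.
    """
    lines = [f"v {x:.9g} {y:.9g} {z:.9g}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in triangles]
    return "\n".join(lines) + "\n"


def save_obj(path, vertices, triangles):
    """Write the OBJ text to a file on disk."""
    with open(path, "w", encoding="utf-8") as fh:
        fh.write(to_obj(vertices, triangles))
```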